Generating Swiss German SMS with an RNN

Every now and then, I read through the fantastic article The Unreasonable Effectiveness of Recurrent Neural Networks and am still amazed by the results Andrej Karpathy got.

He trains a Recurrent Neural Network (RNN) on some input (Shakespeare, LaTeX, Linux source code) and then lets the RNN generate a sample of what it learnt, which is sometimes amazingly similar to the input - but completely new! I really recommend reading through the article; it has many details about RNNs and LSTMs which I will not cover.

Instead, I decided to apply them to a new input - Swiss German!

Getting a corpus

At first I wasn't sure whether there were any text collections for Swiss German at all, so I did a bit of corpus searching. Most of what I found was in standard German, but after a bit more research I found the following two:

- The ArchiMob Corpus, downloadable as XML

- Swiss SMS Corpus, browsable & exportable

I decided to go with the latter, as it had the easiest plain-text access and also because SMS is a fun and recognizable format which has its own quirks like:

- messages have a max. length of 160 characters

- smileys and abbreviations

- opening and ending (like a small letter)

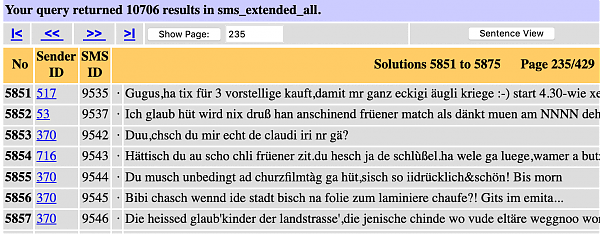

Browsing the corpus, a quick example looks like this:

Source: Swiss SMS Corpus

Per the usage conditions, you can create an account yourself if you want to dive deeper into the corpus. Non-commercial use for research is allowed.

So with this corpus, I ended up with around 10'000 SMS with about 1.2 million characters in total. That's not a huge amount of data, but hopefully enough to train a model!
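A quick sanity check of such numbers could look like the following sketch. It assumes the export has been turned into a list of strings, one SMS per entry (the export format is not described here, so that is an assumption), and `corpus_stats` is a hypothetical helper name.

```python
from collections import Counter


def corpus_stats(messages):
    """Summarize a list of SMS strings (one message per list entry)."""
    lengths = [len(m) for m in messages]
    chars = Counter("".join(messages))
    return {
        "messages": len(messages),
        "characters": sum(lengths),
        "longest": max(lengths, default=0),
        "over_160": sum(1 for n in lengths if n > 160),
        "distinct_characters": len(chars),
    }


# Tiny made-up sample; for the real corpus you would pass in its messages.
sample = ["Hoi du! Bis gli :-)", "Guete Morge min Schatz. Kuss"]
stats = corpus_stats(sample)
print(stats)
```

The number of distinct characters is worth a look, since a character-level model has to predict one of exactly those symbols at every step.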

Training a model

So with some text input available, the next step was to train an RNN.

Instead of installing Lua and Torch (which seems to be a bit of a pain on Mac OS), I went with the prepared Docker image from docker-torch-rnn. You can follow the steps in its README, starting with running Docker and downloading the image:

docker run --rm -ti crisbal/torch-rnn:base bash

You can then copy your text input file into the Docker container (look up the container id with docker container ls):

docker cp input.txt mycontainer:/root/torch-rnn/data/input.txt

Then, the input has to be preprocessed before the training:

python scripts/preprocess.py \
--input_txt data/input.txt \
--output_h5 data/input.h5 \
--output_json data/input.json

For the actual training, I played around with the parameters (see the docs for the available flags) and got the good results shown below. You can also use the default rnn_size of 128, which yields decent results faster but also saturates sooner. I used the following and let it run for a day or so:

th train.lua \
-input_h5 data/input.h5 \
-input_json data/input.json \
-batch_size 100 \
-rnn_size 256 \
-dropout 0.5 \
-gpu -1

Then, to get a sample, you can run:

th sample.lua -checkpoint cv/checkpoint_9600.t7 -length 2000 -gpu -1

Preprocessing, training and getting a sample are really simple and also well described in the README of the Docker repo.
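To give a feel for what character-level preprocessing boils down to, here is a minimal sketch in Python. It is not the code of preprocess.py (which also writes the HDF5 and JSON files used above); the function names are made up for illustration. The idea is simply that every distinct character gets an integer index, and the text becomes a sequence of those integers.

```python
def build_vocab(text):
    """Map each distinct character to an integer index."""
    return {ch: i for i, ch in enumerate(sorted(set(text)))}


def encode(text, token_to_idx):
    """Turn a string into a list of character indices."""
    return [token_to_idx[ch] for ch in text]


def decode(indices, token_to_idx):
    """Turn a list of character indices back into a string."""
    idx_to_token = {i: ch for ch, i in token_to_idx.items()}
    return "".join(idx_to_token[i] for i in indices)


text = "Hoi du! Bis gli :-)"
vocab = build_vocab(text)
encoded = encode(text, vocab)
print(len(vocab), encoded[:5])
print(decode(encoded, vocab))
```

Because the model only ever sees these indices, smileys, umlauts and punctuation are treated exactly like letters, which is why it can learn them without any special handling.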

(Side note: If you want to try a no-install version yourself, there is a simple 100-line Python script by Andrej Karpathy for training a vanilla RNN, which already yields some interesting results.)
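Whichever implementation you use, generating text comes down to repeatedly drawing the next character from the probabilities the network outputs. The following sketch shows that single step with a temperature setting, as character-level samplers typically offer. The scores are made up and the function names are hypothetical; no actual network is involved.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_char(chars, scores, temperature=1.0, rng=random):
    """Draw one character according to the softmax of its score."""
    probs = softmax(scores, temperature)
    return rng.choices(chars, weights=probs, k=1)[0]


# Made-up scores for three candidate next characters.
chars = ["i", "o", "ä"]
scores = [2.0, 1.0, 0.1]
rng = random.Random(0)
print("".join(sample_char(chars, scores, 0.5, rng) for _ in range(10)))
```

A low temperature makes the output more conservative and repetitive, a high one more adventurous and more error-prone.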

Final results

So, let's first have a look at some examples generated by the model!

Hey bisch nicht a?ha währ ev recht spat finde. Wot am sa? tshuflgrätthi. Hihi uhr morn so basster:-) falls ersch probe knabscht gsi. Küssli

Gunte morge froge! Jo...bis gli! Und i hoffe ich lahn hüt de sinder es träffed würd ganz fescht au au und au en schöne tag. Couck kompli? schöne sopine

Hä sich no richtig samschtig sehr uf em geburi zit würkli guät eifach vo mir mache. I bi au zum stadt chunsch! Frög dich scho uf dä 10ni cho hench, wäg de [LastName] Machsch vermüsteli und muess no letscht?

Hoi du, hesch immer über äm vave schmärzä.lüt der en schöne abig chunt und guet abr wers lohnig no mal mal bereich...jo chunsch aber e kauft wäri öpas schaffe.lyebi

Da chasch jetzt gad? Am fritig abe am 14.25 chömr clno, zuger...aber mir hend scho lang name gho? Gösch na den is pässe ko mench dass i offe so du? Muezi nüd guet?ly

Not bad, right?

Examples of nearly real SMS

Before we go into more detail, here are some more results. Unlike the examples above, which were generated in one piece and in that order, these are hand-selected. They are "nearly real", whole SMS that were generated exactly like this and could nearly pass the Turing test:

Hoi Carla! Han gar am di froh uf na halbi 7:35 bis packe... Bi Ich wünsch dr na voll uf ihne mal... Lg jepta

Hej mökele., guet nacht! Und du chasch kei week eifach du vordr mahmmöße! Freu mi! :-*

Hoi hallo, guet N8. Hamr lieb dich widr?ich chume weisch isch s yod debi um bisch dy!:-) emal:-) mer dürk und dass äs guet au bis immer. Okey..greez =)

Ich liebe dich

Hoi mera!wünsch der e schöne abig au!

Isch guet uf de wuche... Es isch woll i'd Hei bei den shuex?:-) freu mi mega soh:-*

Hoi Elina, danke, ich gang ned bis nöd. Merci a de Gf

Sali niess! Glg, alessi, gruess, bis dänn

Hoi du! Nei machemer zötä chan aber nüt au nid?

Du bisch 20.17 fahre? Bin am 10i fertig ab de stell mache, wenn du dörfe morn kuß prisio =)

Hey you, am b.t! I wönsche Der e schöne tag und freu mi sehr sicher voll entwichlig isch nöd bi de Badrege gnüße, blib nid käne sheiss! Glg

hey conri, wenn so es alt. Vermiß di viel spaß..

Guetä Morge, Schätzeli, das'ss wider gsi& wenn grad so znacht oder händ ove xi lieb di!und hesch eu d prüefig, waiß du nöd... Kuss <3

Guete Morge min schatz. Mir chönted chli planet beidis sms lüz ;-) hoffetli no guet gmueterleiszig isch? Ich bin so en grad, also, loss zyt so reda eine au großi im fr... Hbruf dihei verliebt. Umarme und das fascht nöd so vil Spass all da em zug. Lg chrise

Hoi machi! Gruess alika

Liebi Elkeline. Chunsch du au widr? =)

Also! Mueß am 5i dihei? Glg mica

Fun

Some more handpicked, funny messages:

Ha es bett,chunsch au?

Ey geld

Ciao,freu mi und s kino geil!

Ich schaffe soso. Und den chunsch chröt.

Falls guet alles gueti zum Geburtstag! Kuß bis Maus

Bis gli...hoffe mir gahts guet

Hey schmatzel^^ D'pegy.Gruss

Du besch scho paßiert

He. Alles klar? Lg sory. Lg

Hallo schätzeli! Wie gohts dir? Druckers! Chum extra wo tue nüchshe heicharte :-) Natürli ish morn abig....<3

Hallo aff, :-P

Bisoun :-* Ahmen

Magsch mir oi gschrebe!???!

Chum jetzt nid für packe!

Evolution of the model

But to get as far as the samples above, the model had to be trained, and we can dig into the learning steps it made along the way. The training ran up to generation 9600 (the number in the checkpoint file name), and samples from earlier checkpoints give an idea of the progress it makes over time.
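To compare several stages, the sampling command from above can be repeated for each checkpoint. This small sketch only builds the command lines; it assumes that a checkpoint file exists for each listed number, which depends on how often checkpoints were saved during training. `sample_command` is a hypothetical helper.

```python
def sample_command(iteration, length=2000):
    """Build the sample.lua call for one checkpoint, as a list of arguments."""
    return [
        "th", "sample.lua",
        "-checkpoint", f"cv/checkpoint_{iteration}.t7",
        "-length", str(length),
        "-gpu", "-1",
    ]


# The stages looked at in this post.
for iteration in [100, 200, 500, 1200, 2000, 4000, 6000, 9600]:
    print(" ".join(sample_command(iteration)))
```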

Generation 100:

{~S3bf) dis wasch Oumftad gepFmersch iieh? uenhi. ditg est me napMa 2I g*emluttal uutiwe, u em malz pingle wab wus miche ede. zbaml,!eßcba1yi h os. xohdy is :me el glüsch es beg Hetng anssch weat!. zla bir ez iche obet sanmi gunotzar i hemmes shand mer .ha bolisch sin

nuel! do bamd dölt wi ørere schaugel! Lrdgs!.oi po lisch chad gued

&a chimemki gl uhd s ir pidi sal

litesg OO. Wone

Ea niss kleß-Her monm vu lu beige denznen %o im wiunenx goognile k

At the beginning it generates what seems to be pure gibberish. There already seem to be "words" (separated by spaces), but they consist of numbers and punctuation too. The length also varies a lot, with some messages far too long.

Generation 200:

Vb a gischt choti dr weinto licheriellrisslich slüchage gabfet gertt dim.R P genn schputus ... [Na

Hafkz

giie fötal eiefluere #ÖrlS machli gölt.;Jç Weü fue hosch gYolt dagee kfuch geiwt alut sotfe hfz di dirplusi, sorlegnuever chöns granider wienig. Böi) daj ez bsächtab ub! Lebe? Gög, düch em lange'Ei guet! kuemi bisch weh usne

bs mi da,ulter wätgechz bebsittig 8 da.

möss em uf ouiss!?.SH-y. Is guato werbue euegä.? Boer afolt! Die kerme?.. der köbenge. Es pöt ;-) Lufi wave. I zli Pan;D3 Ny less ufe! sseus bit abgech gätz wifole bis isi er na chönn jo HC Lcht yrtia sruesche..isch förs! Hon ens hüß ech na hruch und diach abss Beder honete, ;-)òu ig nenwi ver yünd vanz bacchtos en ahley... Go merizi. :Ly 1D

Hei sicht abaschifsts flichligi e vends nan ;rJU-)siu gso z ungeh fönitsr.. Gschö ßy kanzrass ga hafnäsck guend mer sietes ich as!maell. Ich na mar abir solsch ma jenga lü vt so hesch de a gaige Pachll, i büm olmi un mess so dim?pleganX)

There are also attempts like !XO and ;rJU-) at generating smileys, and even some valid ones like ;-). Another pattern which seems to emerge, at least sometimes, is the start (Hei) and end (Ly) of messages.

Generation 500:

Ji, hüt polz oo.,. el wiedr lnetzt am 2a belle wänn bet togtiere gaft scheiss, au bem denn vorhusch so au uf dich denh, wiedert wisch händ!ha mi jusch....!:-) händ caum lang

Bi chlaf ün gädel no snacht! Ha wersch ihr schöne au.BS oder is bi bis ganz Gand ja sx..das lörd ini danke travä guet und zäbe Vig ihrd etfer de chüsh.

Iesch du Ä bescho des fehn i ha? Pseg das hät 2):) din umdmam hat eshä grin :-) hück jo. ;-) und liebi, coomed cha.. verniä paluellag? Kmongisch gaht het wona di mir chall 4 go fete... tanke, alsch da bis z...rriebi

Hei ghüt desD 2..\ja? mantig beschnalle nöt ufinbälls glad nöd scho ish. Aime! Kußswital sich an dä aichecht ich dal i hüt nid. chli 2me esch zöbe, me seh..

Tiouau, giets ih dir seuz alielschluswind, dir en chasch möche ume ich no guet:-)

Here we can observe the first "real" words, and even parts of sentences. It's still gibberish, but the messages "feel" more like real messages, maybe also due to the stabilizing length. Also, smileys are somehow a lot more common and mostly syntactically correct.

Generation 1200:

Bis problent, waisi de koni! LG Täll

alles morn chönt ;) Mölled en chanteli mit tein pittag ab skmetstelle

chume mat mo üch, aber das am Abigä wacher abig di är Falls no Schef gäne! Und chöndjensch grässe fah chum jo achi chüscht, basdiakt guut:-) wie? Bi hetsch mim abglücbig :-) :-X aber danke grüeße, i gohnmol morge und din...

Hfeum'maal... Dänn eu isch am Kalerglaue.

Schöne abo Obig zrugge abstant zu nid. De isch de si.ty ld bi härbel

Mwo do beruchb.hani sisch viel mim sief davig isch, ich guet geisen dir koschisih,wird mir e zug han.. Kruss

Guete,bi hüt gange? Guet u etze es go wüsse au? Waneaßshim immer gstafber, longgin stam... lg nd

Hoi Viil mi, stachtenit dr nach das mit ässe und fürm 4ine fich go biar okust .. XO

Gseh, han dä 2. 3.=:)

Generation 2000:

Heey d' 2 namal hesch wa in luschti

Ich han en guetä'+ dä zug guet! Bisch ä ha - gschriche würd au zum na, bisch go fräge gaht nöd in sind:-) ok! chöne bald churzes dir

Danke weisch mäm sprächem ahner!kuussi

Häy, ich de gnuegig mi'schoje zochrim nah öchteki. Glg

Bündso dih es nochischo, hesch no du isch jetz nachstde ond keiw..:chin rehnem. Alsooa. Isch guet uh das

Oder i gani jez gseh? Kussa scho chunnt i mun lind!: Berklock :-Phoffentli nöd weis au? Kuß peruanissi, kuss

Bisch dich du zum frite abesch...chasch na dübliche scho, lieb di! pf/

Hean guetä urdä. Ich guete fürli.hüt sind vo wuß?kuß

Generation 4000:

IG¡hx hey carit. Also so ja chöne bahlis recht :) isch nidschmhör um fr de zichter chunt ahschlüt und chönted du daß na a dich & Aber mär send und Bebe so dich wesch! Ich han oh das lelfe. Weiß de mer eipogsueche.. 46 i ha mal beverversind.. Irsch xude und wider? Mir ganz morn no gge

Ja truiko paus:-D <3

Cha dich we dr sgang het früeh?! Schöni morge suscht welle Waster wie morn. Ich han gli verberag sichs druf er am schöni sege da plapi hane;D Jetzt gern zum sms ned uf de Chdol. LBS Hänsch jo äns chli jass etsch uf di muäkt dot, sind i m guete silig. Aber es wäg mi hee mùglich 5. Norstell u grad wo isch go? Was machsch di schnillt oder ganz isch 20.10 no ganz en enete ez andere sicher. Wänn am eneschtig für mi am 30 94 5 in ja und aber d brieb go au s retkani... Lg!

Schatz, glge, okninn kuss

Ciao Lange,hav, däsch du au lurg...daß keichli chunt au wenn hüt erscht am 4 höt beifehnd hüt lg,bisch ev eus worde. Glg

Haiii, i bin echli 7 liebä,am abig ihre) was lendwuche isch nanobrächt :D het uf mal morge? Hd Ape öbis Harura

Hi ich uf dem Schide.. Wesch em hüte tur eba.

Uf schaffe...^^

Generation 6000:

Hallo, schöss mitm zlücht

Hey wäger freu würd in Minmanent nöd =)duettder bitte mega chaum

Sound glaub. Ja guet au? den wetsch mi glaub juglsch! Teal kunkli Miichs-schöns pessere morn. Und riedhunkropstolda:-DInisellana

Dünd min i ebe wäg.odr wünsch dir mal ned so chan doch mich dänke mer am furz gsuntig gärn biner d 5er wiedär... Dak isch rena wieder. Wörd fahre lang aber wenn mie gueti so? Freu meh öbt ebe hüt ee chlöger au ani tür uf rashig.

Und das ich uf es froh. Jo

Isch denn e pondsorrolli schabe. Sunnäd. Ganz jo anders ufs orte schickmy. De wach händ n8d.. :)

ja a gueti tübli. plaaa. Ish hüt es salschnögle!du hesch ja guet.17.03:-) häsch e dänkt und Bädä fähl :-) denn mache chömed im si. Du grad das do kloiss? Viel net mit wärt gwünke;+ so

Hoi Mautli, wünsch der het au kul! mit het uslofe isch gie. Bische ato do nohuesters mal gaht!

Nonall fiend? Wil [LastName] Change wenn dr daus für de nodusi!

Haelooi viel, isch hali Romat =)ma froi wünsch der e mittängerviergug domätta. mir so früen shaffe? ich was gönd doch next mi uf Egschafet fertig in vom de erstnande suztal!

Observations

As always with neural networks, we're not exactly sure what it learned, but there are many things which stand out (at least to a human...).

Placeholders

Because the original messages were anonymized, the RNN also starts generating placeholders. Masked names show up as [LastName], for example in mit am [LastName]? Lg!!. Email addresses appear as xxxxxxxxxxxxxx@yyyyyyyyyyyyyyyyyyy. Other names which would reveal personal data are replaced by NNNNN or similar, just like in the original source. One example is wenn du in NNN wotsch, which suggests some kind of store; another is kuß NNN, where a person's name is replaced.
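If you want to find such placeholders in the generated text yourself, a few regular expressions go a long way. The patterns in this sketch are guessed from the examples in this post, not taken from the corpus documentation, so treat them as assumptions.

```python
import re

# Patterns guessed from the examples above, not from the corpus docs.
PLACEHOLDERS = {
    "bracketed": re.compile(r"\[[A-Za-z]+\]"),  # e.g. [LastName]
    "masked": re.compile(r"\bN{3,}\b"),         # e.g. NNN, NNNNN
    "email": re.compile(r"\bx+@y+"),            # e.g. xxxx@yyyy
}


def find_placeholders(message):
    """Return {kind: [matches]} for every placeholder kind found."""
    found = {}
    for kind, pattern in PLACEHOLDERS.items():
        matches = pattern.findall(message)
        if matches:
            found[kind] = matches
    return found


print(find_placeholders("mit am [LastName]? Lg!!"))
print(find_placeholders("wenn du in NNN wotsch"))
```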

Start / end of messages

Almost every message starts with a Hey / Hoi / Hei / Hallo. Also, they tend to end with specific ending patterns like Lg, Hdl, glg, ly, kuss, ***, baccio, kußi, schmatz, Küßli. Lots of synonyms for kisses! :-)
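A rough way to quantify this is to look at the first word and the last few words of each message. The word lists in this sketch are just a subset of the patterns mentioned above, and looking at the last three words is an arbitrary choice that allows for a name after the closing.

```python
import re
from collections import Counter

GREETINGS = {"hey", "hoi", "hei", "hallo"}
CLOSINGS = {"lg", "hdl", "glg", "ly", "kuss"}


def opening_and_closing(message):
    """Return (greeting, closing); each is None if no known pattern is found."""
    words = re.findall(r"\w+", message.lower())
    greeting = words[0] if words and words[0] in GREETINGS else None
    # Check the last three words, so that "Glg mica" still counts.
    closing = next((w for w in reversed(words[-3:]) if w in CLOSINGS), None)
    return greeting, closing


messages = [
    "Hoi Elina, danke, ich gang ned bis nöd. Merci a de Gf",
    "Also! Mueß am 5i dihei? Glg mica",
    "Ich liebe dich",
]
counts = Counter(opening_and_closing(m) for m in messages)
print(counts)
```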

Use of names

Over time, the RNN gets good at generating new names, and also at using them in the correct context (for example for greeting or saying goodbye): Hallo Sannä!, Lg beld, Gruess artetio, schmatz<3 jeantine, glg sarama, Hey cjanna.

I checked the original corpus, and many of these names do not appear in it, which means our RNN came up with them itself.
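Such a check can be automated roughly as follows. This is only a sketch of the idea, not the way the check was actually done: it treats the word right after a greeting or closing as a name candidate (a crude heuristic) and reports the candidates that never occur as a word in the corpus text.

```python
import re

NAME_CONTEXT = re.compile(
    r"\b(?:hallo|hey|hoi|lg|glg|gruess)\s+(\w+)", re.IGNORECASE
)


def candidate_names(message):
    """Words that directly follow a greeting or closing."""
    return [name.lower() for name in NAME_CONTEXT.findall(message)]


def novel_names(generated, corpus_text):
    """Name candidates from generated messages that are absent from the corpus."""
    corpus_words = set(re.findall(r"\w+", corpus_text.lower()))
    names = {n for message in generated for n in candidate_names(message)}
    return sorted(names - corpus_words)


generated = ["Hallo Sannä!", "Lg beld", "Hoi du!"]
corpus_text = "Hoi du, wie gohts? Lg Anna"  # stand-in for the real corpus
print(novel_names(generated, corpus_text))
```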

Times

Some of the messages are about meeting each other or discussing a scheduled appointment, and the network uses times (most of the time) with the correct syntax, like 9.30, 15.45 and 4i, the Swiss German version of "4 o'clock".

It also uses them in the correct context, like ufm 7. 23 zug, a typical way to say "on the 7.23 train" (the train which leaves at 7.23). The same goes for times written the way they are spoken, as a combination of words and numbers: halb 4, which stands for half past 3.
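The three notations can be picked up with one regular expression. This sketch is based only on the examples in this post; it will also match things that are not times (a date like 17.03, for instance), so it is a rough filter rather than a parser.

```python
import re

TIME_PATTERN = re.compile(
    r"\b(?:halb\s+(?P<half>\d{1,2})"     # halb 4 -> 3:30
    r"|(?P<h>\d{1,2})\.(?P<m>\d{2})"     # 15.45  -> 15:45
    r"|(?P<oclock>\d{1,2})n?i)\b",       # 4i, 10ni -> full hour
    re.IGNORECASE,
)


def parse_times(message):
    """Return a list of (hour, minute) tuples for the times found."""
    times = []
    for match in TIME_PATTERN.finditer(message):
        if match.group("half"):
            # "halb 4" means half an hour before 4; "halb 1" is 12:30.
            hour = int(match.group("half")) - 1 or 12
            times.append((hour, 30))
        elif match.group("h"):
            times.append((int(match.group("h")), int(match.group("m"))))
        else:
            times.append((int(match.group("oclock")), 0))
    return times


print(parse_times("Bin am 10i fertig, chum uf de 7.23 zug oder halb 4"))
```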

Smileys, abbreviations, emphasis

We already saw the "evolution" of smileys, and how they are part of typical SMS language. They are very common, with over 200 smileys in a total of 800 generated messages (which feels about right when scanning through the original messages).

Smileys appear in typical variants like :-), :-(, ;), :-P, xD and :-*.
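Counting them can be done with a small pattern like the one in this sketch. It covers only the variants listed above plus a few close relatives, so it is an approximation and not the exact method behind the numbers in this post.

```python
import re

# Eyes (: ; =), optional nose (-), then a mouth; "xD" only as a standalone token.
SMILEY = re.compile(r"[:;=]-?[)(DPp*]|(?<!\w)[xX]D(?!\w)")


def smileys_per_message(messages):
    """Average number of smileys per message."""
    total = sum(len(SMILEY.findall(m)) for m in messages)
    return total / len(messages) if messages else 0.0


print(SMILEY.findall("guet:-) hihi xD ;) :-* :-P"))
print(smileys_per_message(["guet:-)", "ok", "hihi :-D:-*", "lg"]))
```

With over 200 smileys in 800 messages, the generated text would come out at a bit more than 0.25 smileys per message by this measure.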

In one generated message, it even snaps into a "smiley" mode at the end (not sure if inspired by a real example?):

(...) nur besser au guet,bis uf dä huachigs:-D:-*:-D:-*:-*:-*:-*:-(:-*:-*:-*

Common abbreviations are also used, like Hdmfl, Lg, Ly, K., Glg.

Sometimes it seems as if it tries to generate its own abbreviations at the end of a message, like the following examples:

Sms ha äsiersch mol mitweh. Und du?hnufggfunder

(...) iuma druf sicher dus. Min Maggy würd jetzt hüt am 7ni. VuluL

(...) Müesse drüege u vom hilf so bim moxte wel zum lehrer. brucht s7h. Glgl

(...) äß gfinde:od(wäni au ned uf Dir? Hd

But this is just a guess, as we don't really know what it tried there :-)

The network also generates emphasis, like ja sooooooooooooooooooooooh ria or Jaaaaaaaaaaaaaaaaaaaa, which shows up now and then in the text.

Sentence structure

There are many sentences which seem a bit strange, with some words making sense together, but then the words following do not make sense anymore, like the following example:

Hoi Chatte isch ganz wünsch der du sosuntwa min e bemänsch (...)

Also, sometimes the word order is just a bit strange or unusual:

Muens mer am sorge gsüessi uf...??! Wie lieb häsch? Danke! Bis morn.freu mi!""

There are also valid sentences with one strange word like "zötä" or "mammösse", where you as a reader feel as if the language is valid and you just don't know the slang.

One thing it gets mostly right is starting new sentences with a capital letter, like the following:

Du guät nacht? Hey ganz eifach morn ;D Wennd mir chinä? LU

This is kind of surprising, as you wouldn't expect everybody to follow that rule in SMS. From a quick skim through the original data, about 2/3 of it follows the rule, which is enough for the network to pick it up as a "rule" to follow.
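Instead of skimming, the share could also be estimated with a few lines of Python. This sketch splits on sentence-ending punctuation, which is crude for SMS (times like 7.23 or smileys will confuse it), so the result is only a rough estimate.

```python
import re


def capitalized_share(messages):
    """Share of sentences whose first letter is upper case."""
    starts = []
    for message in messages:
        for sentence in re.split(r"[.!?]+\s*", message):
            # First alphabetic character of the sentence, if there is one.
            first = next((ch for ch in sentence if ch.isalpha()), None)
            if first is not None:
                starts.append(first.isupper())
    return sum(starts) / len(starts) if starts else 0.0


print(capitalized_share(["Du guät nacht? Hey ganz eifach morn. wennd mir chinä?"]))
```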

Conclusion

Well, that was fun. It's amazing what can be achieved with a pretty simple, character-based neural network, and the results really speak for themselves.

You can download a 100k character sample as a text file here. You can also download the pre-trained models for the parameters above: trained_model-zip.

Thanks for reading!