Indexing PDFs with Tesseract

Tesseract is an OCR software developed by Google (since 2006) and very well known, also due to its interesting history, but mostly as one of (if not the) best open source OCR tool available.

I've always wanted to give it a try, and when I faced the problem of combing through my old study notes and not finding a particular piece of text, I thought if it again.

Here's the PDF document I used for testing, 40 pages of scanned documents: morphologie.pdf

The naive approach

At first, I thought maybe my idea was an overkill, and having already worked with PyPDF I knew it had some functionality to extract text out of a PDF document. So I tried that (needs pip3 install PyPDF2 to work):

import PyPDF2

pdf = PyPDF2.PdfFileReader(open('morphologie.pdf', 'rb'))

for page in pdf.pages:

content = page.extractText()

print('Extracted text:', content)And the output was:

Extracted text: So no luck here - I had the justification to do the OCR myself, yay :-)

Getting the image data

For getting the text from the PDF, I first needed the image data.

I looked a bit around and the fastest way seemd to be with PyMuPDF, and I used a simplified version of this SO answer:

import fitz

doc = fitz.open('morphologie.pdf')

for page in range(len(doc)):

for img in doc.getPageImageList(page):

xref = img[0]

pix = fitz.Pixmap(doc, xref)

pix.writePNG('img/p%s.png' % page)This would extract all images into a img folder:

Running Tesseract

Next up was using Tesseract, so I first needed to get a current version.

I noticed that I had an older version (v3.05.01), so I upgraded it via brew:

brew upgrade tesseractSomehow this took nearly two hours (not sure why, maybe hombrew updated itself too..?), but after installing, tesseract -v would yield tesseract 4.1.1 (with leptonica-1.80.0), so everything worked.

So then I wanted to actually use Tesseract:

$ tesseract img/p0.png stdout

Error opening data file ./tessdata/eng.traineddata

Please make sure the TESSDATA_PREFIX environment variable is set to your "tessdata" directory.

Failed loading language 'eng'

Tesseract couldn't load any languages!

Could not initialize tesseract.Apparantly, training data (the trained models) are also needed. They can be downloaded at Github: tesseract-ocr/tessdata. I decided to get both deu.traineddata and eng.traineddate.

After putting those two files in a folder tessdata I tried again, with specifying the language:

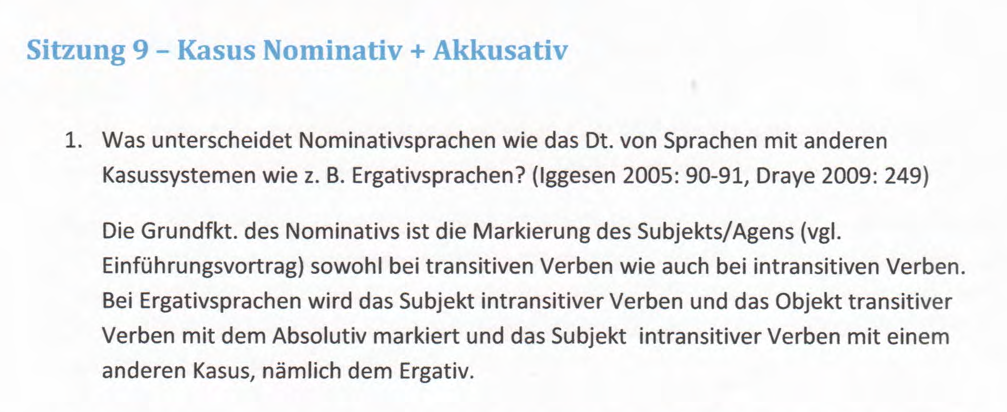

$ TESSDATA_PREFIX="./tessdata" tesseract img/p28.png stdout -l deuFor page 28, which looks the following...

...Tesseract generated the following output:

Sitzung 9 - Kasus Nominativ + Akkusativ

1. Was unterscheidet Nominativsprachen wie das Dt. von Sprachen mit anderen

Kasussystemen wie z. B. Ergativsprachen? (Iggesen 2005: 90-91, Draye 2009: 249)

Die Grundfkt. des Nominativs ist die Markierung des Subjekts/Agens (vgl.

Einführungsvortrag) sowohl bei transitiven Verben wie auch bei intransitiven Verben.

Bei Ergativsprachen wird das Subjekt intransitiver Verben und das Objekt transitiver

Verben mit dem Absolutiv markiert und das Subjekt intransitiver Verben mit einem

anderen Kasus, nämlich dem Ergativ.Which is an outstanding result for an unoptimized image! Not all the pages were recognized as good as this part, but on many of them there are also handwritten notes or highlightings.

I dug a bit into the different modes of running Tesseract (-psm option) but ultimately, the default provided already pretty solid results. The modes would be (from the docs):

0 = Orientation and script detection (OSD) only.

1 = Automatic page segmentation with OSD.

2 = Automatic page segmentation, but no OSD, or OCR. (not implemented)

3 = Fully automatic page segmentation, but no OSD. (Default)

4 = Assume a single column of text of variable sizes.

5 = Assume a single uniform block of vertically aligned text.

6 = Assume a single uniform block of text.

7 = Treat the image as a single text line.

8 = Treat the image as a single word.

9 = Treat the image as a single word in a circle.

10 = Treat the image as a single character.

11 = Sparse text. Find as much text as possible in no particular order.

12 = Sparse text with OSD.

13 = Raw line. Treat the image as a single text line,

bypassing hacks that are Tesseract-specific.Scripting it together with Python

For building the index, the command above is wrapped into a subprocess call, and then called for each page. The resulting text is saved into a simple json file, so that we can access it later. The whole processing of 40 pages takes about 3 minutes on my MacBook Pro.

import subprocess

import glob

import os

import json

import time

start_time = time.time()

# Set tessdata environment variable

os.environ["TESSDATA_PREFIX"] = './tessdata'

pages = {}

for image in glob.glob('img/*.png'):

print('Indexing image:', image)

page = image.split('/')[1].split('.')[0]

t = subprocess.run(['tesseract', image, 'stdout', '-l', 'deu'], stdout=subprocess.PIPE)

text = t.stdout.decode('utf-8')

print('Ran tesseract, found content with length:', len(text))

pages[page] = text

with open('index.json', 'w') as write_file:

write_file.write(json.dumps(pages))

print('Finished indexing', len(pages.keys()), 'pages in', time.time() - start_time, 's')After the index is built, we want to search it:

import json

pages = {}

with open('index.json', 'r') as read_file:

pages = json.loads(read_file.read())

print('Search index.json, type "quit" to quit.\n')

query = ''

while query != 'quit':

query = input('Search for term: ')

matches = []

for page, text in pages.items():

if query.lower() in text.lower():

matches.append(page)

print('Found query on pages:', ', '.join(matches))Some examples:

Search index.json, type "quit" to quit.

Search for term: Kasus

Found query on pages: p28, p2, p0, p30

Search for term: Verb

Found query on pages: p10, p28, p14, p17, p16, p8, p3, p33, p26, p32, p24, p31, p22, p23

Search for term: Pluralbildung

Found query on pages: p17, p0, p1, p27, p26, p24, p18, p19, p21, p22

Search for term: semantisch

Found query on pages: p13, p10, p29, p9, p8, p5, p4, p7, p30, p31, p21, p20

Search for term: hebräisch

Found query on pages: p5

Search for term: Zugehörigkeit

Found query on pages: p33Conclusion

So with around 100 lines of Python, we got ourselves a useful way to index and search through PDF files. Of course this will not work for handwritten pages (at least with my handwriting...) and not for all kind scanning qualities, but still, Tesseract does a very impressive job for general text recognition.

Thanks for reading!